Question Answering on Multiple Files with Llama 2 and RAG

In the previous post, we used Llama 2 and retrieval augmented generation (RAG) for question answering. However, that method worked on a single file, and in many scenarios a chatbot needs to know the information spread across multiple input files. This post demonstrates how to achieve this with Llama 2 at no cost.

This post will show how to:

- Run Llama 2 with GPUs

- Create a vector store from multiple files

- Answer questions with RAG over the multiple files in the vector store

1 Get Llama 2 Ready

First, install the Python dependencies, download the Llama 2 model, and load it. This part is identical to the previous post, so the details are not repeated here.

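# Build llama-cpp-python with CUDA support (newer releases use -DGGML_CUDA=on instead of -DLLAMA_CUBLAS=on)
!CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install --upgrade --force-reinstall llama-cpp-python --no-cache-dir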

!pip install huggingface_hub chromadb langchain sentence-transformers pinecone_client

import numpy as np
import pandas as pd
from huggingface_hub import hf_hub_download
from llama_cpp import Llama
from langchain.llms import LlamaCpp
from langchain.chains import LLMChain
from langchain.callbacks.manager import CallbackManager
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
from langchain.prompts import PromptTemplate

# Vector store
from langchain.document_loaders import DirectoryLoader, TextLoader
from langchain.embeddings.sentence_transformer import SentenceTransformerEmbeddings
from langchain.vectorstores import Chroma

# Show result
import markdown

!wget https://huggingface.co/TheBloke/Llama-2-7b-Chat-GGUF/resolve/main/llama-2-7b-chat.Q5_0.gguf

# Token-wise streaming: the answer is printed token by token as Llama generates it
callback_manager = CallbackManager([StreamingStdOutCallbackHandler()])

llama_model_path = 'llama-2-7b-chat.Q5_0.gguf'
n_gpu_layers = 40  # Change this value based on your model and your GPU VRAM pool (Llama 2 7B has 32 layers, so 40 offloads all of them).
n_batch = 512  # Should be between 1 and n_ctx; consider the amount of VRAM in your GPU.

llm = LlamaCpp(
    model_path=llama_model_path,
    temperature=0.1,
    top_p=1,
    n_ctx=4096,  # Llama 2 was trained with a 4,096-token context window
    n_gpu_layers=n_gpu_layers,
    n_batch=n_batch,
    callback_manager=callback_manager,
    verbose=True,
)

2 Create Vector Database

First, let's download a dataset:

!wget -q https://www.dropbox.com/s/vs6ocyvpzzncvwh/new_articles.zip

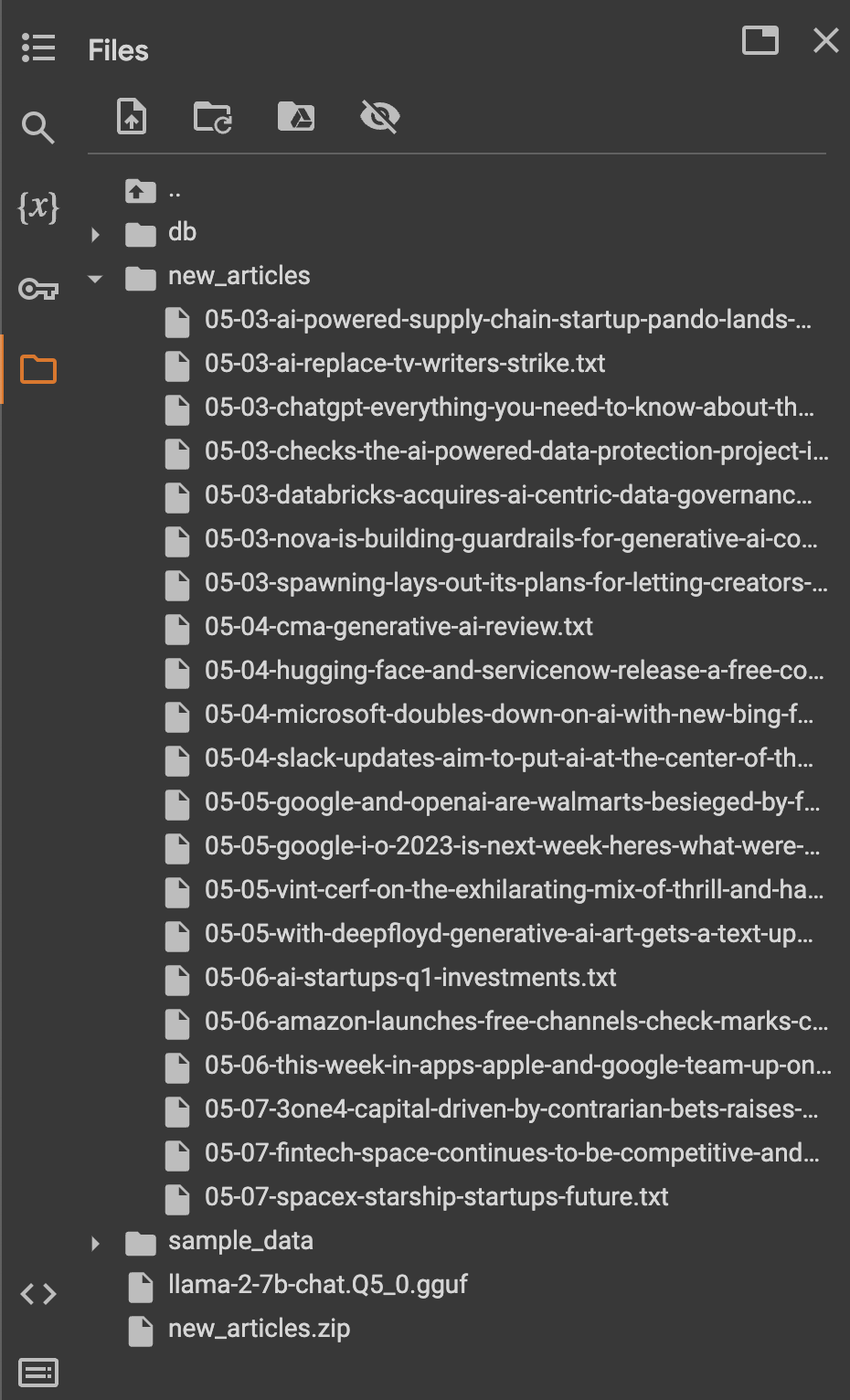

!unzip -q new_articles.zip -d new_articlesThese are a bunch of news text files:
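Before loading the files with LangChain, a quick standard-library check confirms what was extracted. The sketch below mirrors, in a simplified way, what DirectoryLoader with TextLoader does: read each matching file and keep its path as the "source". load_text_files is a hypothetical helper (not a LangChain function), and the files are assumed to be UTF-8 plain text.

```python
from pathlib import Path


def load_text_files(directory: str, pattern: str = "*.txt") -> list[dict]:
    """Read every file in `directory` matching `pattern` (non-recursive).

    Hypothetical helper for illustration: one record per file, keeping the
    file path as the "source", loosely mirroring DirectoryLoader + TextLoader.
    """
    records = []
    for path in sorted(Path(directory).glob(pattern)):
        if path.is_file():
            records.append({"source": str(path), "text": path.read_text(encoding="utf-8")})
    return records


# Only run the demo if the extracted dataset folder is present
if __name__ == "__main__" and Path("new_articles").is_dir():
    docs = load_text_files("new_articles")
    print(f"Loaded {len(docs)} files")
    for doc in docs[:3]:
        print(doc["source"], len(doc["text"]), "characters")
```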

2.1 Load Files

Load the files with LangChain's DirectoryLoader, then split them into overlapping chunks:

from langchain.text_splitter import RecursiveCharacterTextSplitter

# Load every .txt file in the folder; each file becomes one Document
loader = DirectoryLoader('./new_articles/', glob="*.txt", loader_cls=TextLoader)
documents = loader.load()

# Split into chunks of up to 1000 characters that overlap by 200 characters
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
texts = text_splitter.split_documents(documents)

2.2 Create the Database
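Each chunk produced by the splitter in section 2.1 becomes one entry in the database. To make chunk_size=1000 and chunk_overlap=200 concrete, here is a simplified sketch that cuts text into fixed 1,000-character windows, each starting 800 characters after the previous one so that neighbors share 200 characters. It is an illustration under that assumption, not LangChain's algorithm: RecursiveCharacterTextSplitter also tries to break on paragraph, line, and word boundaries. split_with_overlap is a hypothetical name.

```python
def split_with_overlap(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Cut text into fixed-size character windows that overlap.

    Simplified illustration: LangChain's RecursiveCharacterTextSplitter also
    prefers to break on paragraph, line, and word boundaries.
    """
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap  # each window starts this many characters after the last
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = "".join(str(i % 10) for i in range(2500))  # 2,500 characters of filler
    chunks = split_with_overlap(sample)
    print([len(c) for c in chunks])  # [1000, 1000, 900]
    print(chunks[0][-200:] == chunks[1][:200])  # True: neighbors share 200 characters
```

A larger overlap reduces the chance that a fact straddling a chunk boundary is split between two chunks, at the cost of more chunks to embed and store.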

from langchain.embeddings import HuggingFaceEmbeddings

# Save the db on disk
persist_directory = 'db'

# HuggingFace embeddings run locally, so they are free to use
# (by default this loads the sentence-transformers/all-mpnet-base-v2 model)
embedding = HuggingFaceEmbeddings()

vectordb = Chroma.from_documents(documents=texts,
                                 embedding=embedding,
                                 persist_directory=persist_directory)

You can save the database to disk and load it back into the workflow as follows:

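vectordb.persist()  # Since Chroma 0.4, documents are persisted automatically, so this call may be unnecessary
vectordb = None

# Reload the persisted database from disk
vectordb = Chroma(persist_directory=persist_directory,
                  embedding_function=embedding)

2.3 Make a Retriever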
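Conceptually, a retriever embeds the query with the same model used for the chunks and returns the k stored chunks whose vectors are closest to it. The sketch below illustrates that ranking step under simplifying assumptions: a toy word-count vector stands in for the HuggingFace embedding model, cosine similarity is used as the closeness measure (Chroma's own distance metric is configurable), and embed, cosine, and top_k are hypothetical names, not LangChain or Chroma APIs.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a neural embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list[str], k: int = 5) -> list[str]:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Made-up chunks for illustration only
    chunks = [
        "Notes on Google IO and the Pixel lineup.",
        "Notes on a startup funding round.",
        "A recipe for banana bread.",
    ]
    print(top_k("What happened at Google IO?", chunks, k=1))
    # ['Notes on Google IO and the Pixel lineup.']
```

In the real pipeline, Chroma stores the chunk vectors once when the database is built, so only the query has to be embedded at question time; this sketch re-embeds every chunk per query purely for brevity.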

The k value, the number of chunks retrieved for each query, is a parameter to tweak for your use case:

retriever = vectordb.as_retriever(search_kwargs={"k": 5})

3 RAG

We then use LangChain's RetrievalQA chain to retrieve the most relevant chunks from the vector database and pass them to Llama 2 as context, so the model can answer questions about documents it was never trained on.
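With its default chain_type="stuff", RetrievalQA places all k retrieved chunks into a single prompt together with the question. The sketch below assumes that behavior; the template wording is illustrative rather than LangChain's exact default, the 4-characters-per-token rule is only a rough heuristic (real counts depend on Llama 2's tokenizer), and build_stuff_prompt, estimate_tokens, and fits_context are hypothetical helpers. It also shows why k matters for a model with Llama 2's native 4,096-token window.

```python
import math


def build_stuff_prompt(question: str, chunks: list[str]) -> str:
    """Put every retrieved chunk into one prompt, as the "stuff" strategy does.

    The template wording is illustrative, not LangChain's exact default.
    """
    context = "\n\n".join(chunks)
    return (
        "Use the following pieces of context to answer the question at the end. "
        "If you don't know the answer, just say that you don't know.\n\n"
        f"{context}\n\nQuestion: {question}\nHelpful Answer:"
    )


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough heuristic (about 4 characters per token for English text)."""
    return math.ceil(len(text) / chars_per_token)


def fits_context(prompt: str, n_ctx: int = 4096, max_new_tokens: int = 256) -> bool:
    """True if the estimated prompt size plus room for the answer fits in the window."""
    return estimate_tokens(prompt) + max_new_tokens <= n_ctx


if __name__ == "__main__":
    question = "Any news about Google IO 2023?"
    for k in (5, 16):
        prompt = build_stuff_prompt(question, ["x" * 1000] * k)  # k chunks of 1,000 characters
        print(k, estimate_tokens(prompt), fits_context(prompt))
    # 5 1300 True
    # 16 4055 False
```

With k=5 the estimate stays well under the 4,096-token window, while k=16 would leave no room for the answer, which is one reason to keep k modest.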

First, create the qa_chain:

# Use another LangChain chain, RetrievalQA, to connect Llama to the documents stored in the vector db
from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(
    llm,
    retriever=retriever
)

Then let's ask a few questions about the input documents. Here is the first question:

query = "Any news about Hugging Face and ServiceNow? Also include the source in the response."
llm_response = qa_chain(query)  # The answer streams to stdout; the full text is in llm_response["result"]

The result looks like this:

Hugging Face raised $35 million from investors including ServiceNow, according to TechCrunch on May 18, 2022. (Source: TechCrunch)

Let's ask another question:

query = "Any news about Google IO 2023? Also include the source in the response."
llm_response = qa_chain(query)

The answer to the second question is:

Based on the provided context, it seems that Google IO 2023 is expected to announce new hardware, including a foldable smartphone called Pixel Fold, and possibly a budget device called Pixel 7a, as well as updates to Wear OS and developer tools. Additionally, there may be news about Google's AI plans, with generative AI (like Bard) appearing across Google's line of products. However, I don't know the exact details or timeline of these announcements, as the provided context only provides general information about what to expect from the conference.

4 Summary

At this point, you can see what Llama 2 makes possible in this workflow when combined with the other techniques covered in my blog, notably:

- Swift inference powered by GPUs

- Thoughtful responses with appropriate prompts

- Question answering utilizing a knowledge database

- A user-friendly web interface

Together, these building blocks let developers build more capable applications. Stay tuned for more!