Permutation Importance for Feature Selection

1 Introduction

In previous articles like Decision Tree Notes, some common feature selection techniques have been introduced. In this article, we will continue to focus on this topic and explain a new method for assessing feature importance: Permutation Importance.

2 Algorithm Deconstruction

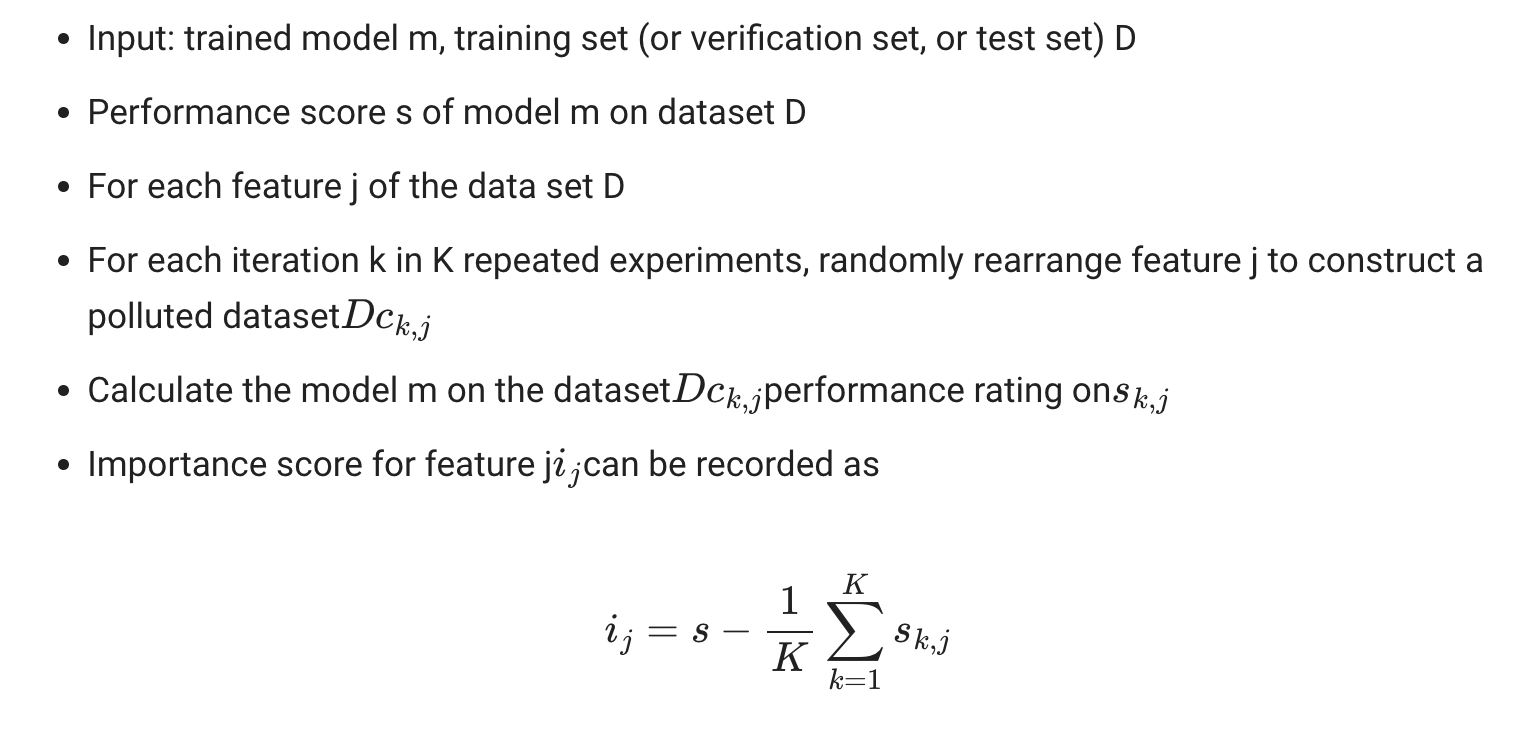

Permutation Importance is suitable for tabular data, and its assessment of feature importance depends on the extent to which the model performance score decreases when the feature is randomly rearranged. Its mathematical expression can be represented as:

3 Example Code

from sklearn.datasets import load_diabetes

from sklearn.model_selection import train_test_split

from sklearn.linear_model import Ridge

from sklearn.inspection import permutation_importance

diabetes = load_diabetes()

X_train, X_val, y_train, y_val = train_test_split(

diabetes.data, diabetes.target, random_state=0)

model = Ridge(alpha=1e-2).fit(X_train, y_train)

model.score(X_val, y_val)

scoring = ['r2', 'neg_mean_absolute_percentage_error', 'neg_mean_squared_error']

# The scoring parameter can include multiple calculation indicators at the same time. This is more efficient than using permutation_importance repeatedly, because the predicted value can be used to calculate different indicators.

r_multi = permutation_importance(model, X_val, y_val, n_repeats=30, random_state=0, scoring=scoring)

for metric in r_multi:

print(f"{metric}")

r = r_multi[metric]

for i in r.importances_mean.argsort()[::-1]:

if r.importances_mean[i] - 2 * r.importances_std[i] > 0:

print(f" {diabetes.feature_names[i]:<8}"

f"{r.importances_mean[i]:.3f}"

f" +/- {r.importances_std[i]:.3f}")

The output is:

r2

s5 0.204 +/- 0.050

bmi 0.176 +/- 0.048

bp 0.088 +/- 0.033

sex 0.056 +/- 0.023

neg_mean_absolute_percentage_error

s5 0.081 +/- 0.020

bmi 0.064 +/- 0.015

bp 0.029 +/- 0.010

neg_mean_squared_error

s5 1013.903 +/- 246.460

bmi 872.694 +/- 240.296

bp 438.681 +/- 163.025

sex 277.382 +/- 115.126

4 Conclusion

Compared with tree models, feature importance is usually judged based on the decrease in impurity, which is usually based on the training set. When the model is overfitting, the importance of features is misleading. In this case, seemingly important features may not have satisfactory predictive power for new data encountered by the model online.

At the same time, feature importance based on reduction in impurity is easily affected by high-cardinality features, so numerical variables often rank higher. In contrast, Permutation Importance has no bias towards model features and is not limited to specific model types, so it has a wide range of applications. Please note that if the features have strong multicollinearity, it is recommended to take only one important feature. The method can be viewed in this example.

At the same time, Scikit Learn also provides an intuitive example to demonstrate the difference between feature importance based on impurity reduction and Permutation Importance.

Hope this sharing is helpful to you, and welcome to leave comments for discussion!