Build a macOS App Powered by Llama 2 in Three Steps

Generative AI has drawn a lot of attention lately, and Llama 2 from Meta is among the most discussed open-source large language models. The Hugging Face team recently released tooling for running large language models inside macOS applications. This post walks you through integrating Llama 2 into a macOS app so that you can build your own applications on top of it.

1 Prerequisites

To follow the steps in this post, you should have:

- A computer running macOS

- Git, or a GitHub client

- Xcode, available for free in the Mac App Store

- A few Python packages installed (huggingface_hub, covered in section 4.1)
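The checklist above can be verified with a few lines of Python. This is a minimal sketch, not part of the original workflow: the function name `check_prerequisites` is made up for illustration, and finding `xcodebuild` on the PATH is only a rough proxy for a working Xcode installation.

```python
import importlib.util
import platform
import shutil


def check_prerequisites() -> dict:
    """Return a mapping of prerequisite name -> whether it appears to be available."""
    return {
        # platform.system() reports "Darwin" on macOS
        "macOS": platform.system() == "Darwin",
        # shutil.which returns None when the executable is not on the PATH
        "git": shutil.which("git") is not None,
        "xcodebuild": shutil.which("xcodebuild") is not None,
        # find_spec returns None when the package is not importable
        "huggingface_hub": importlib.util.find_spec("huggingface_hub") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'found' if ok else 'missing'}")
```

Anything reported as missing can be installed before moving on to the next section.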

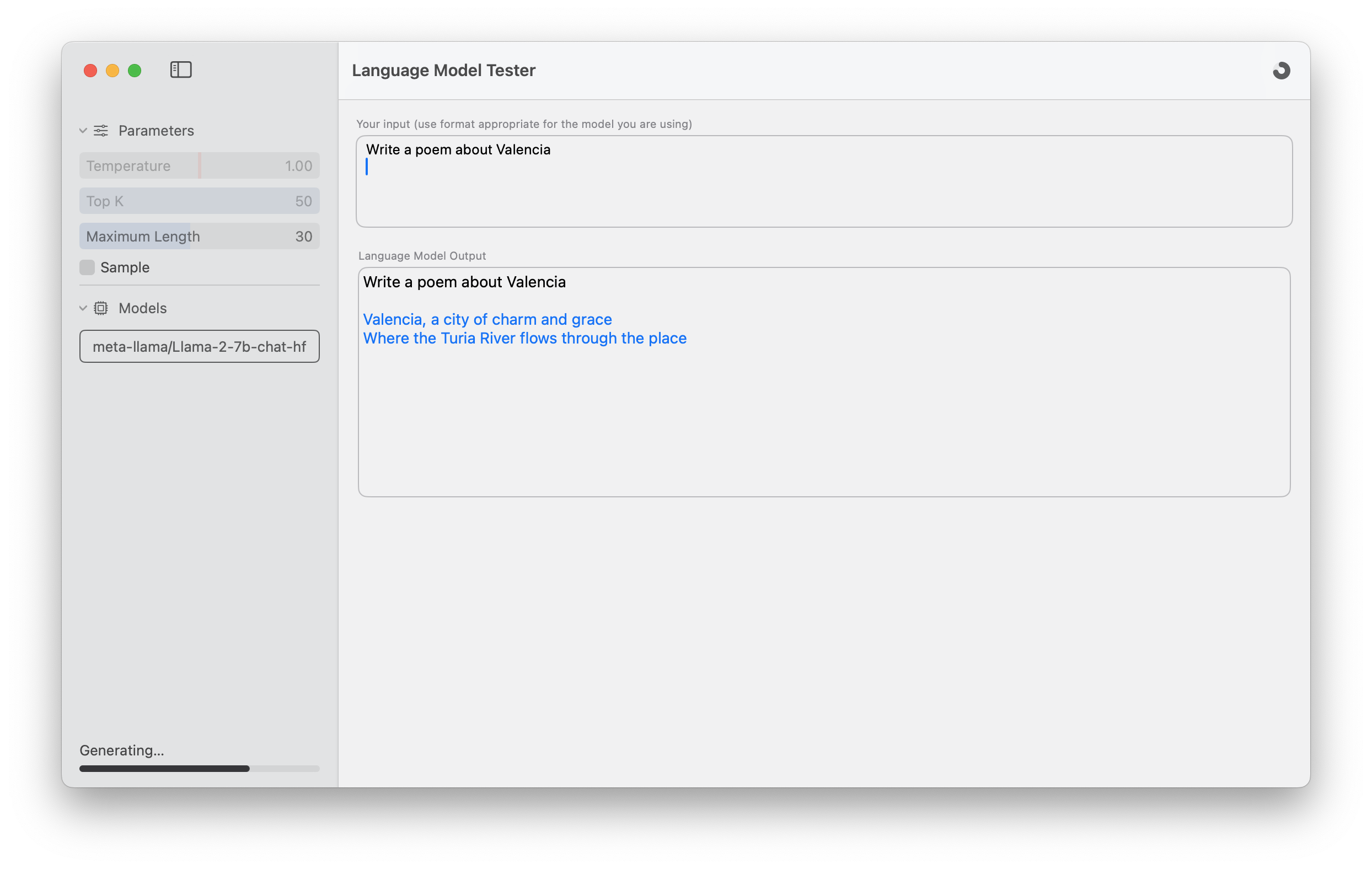

Following the steps, you can build an macOS app that you can interact with like below:

2 Swift-Chat Code

Clone the swift-chat GitHub repo with the command below:

git clone https://github.com/huggingface/swift-chat

If you are not familiar with Git or GitHub, it is worth going through an introductory tutorial first.

3 Xcode Build

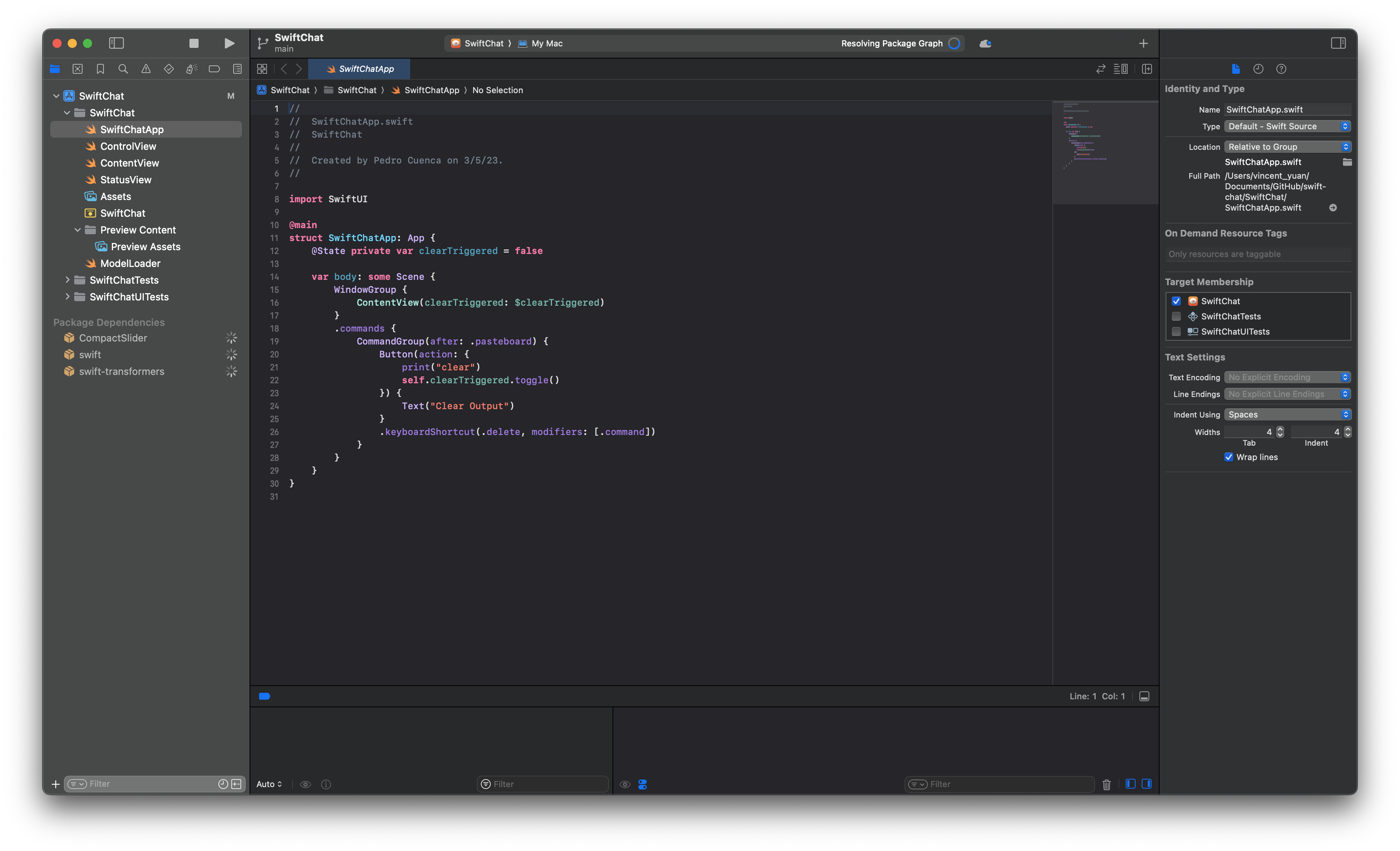

Use Xcode to open the project file - SwiftChat.xcodeproj in that Git repo cloned in the last step:

Click the play button on the top left to build the project and the app will show up.

4 Download Llama 2 CoreML Model

The app needs a CoreML model to load. There are several ways to convert a PyTorch or TensorFlow model into a CoreML model, as quoted below:

1. Use the transformers-to-coreml conversion Space: This is an automated tool built on top of exporters (see below) that either works for your model, or doesn't. It requires no coding: enter the Hub model identifier, select the task you plan to use the model for, and click apply. If the conversion succeeds, you can push the converted Core ML weights to the Hub, and you are done!

2. Use exporters, a Python conversion package built on top of Apple's coremltools (see below). This library gives you a lot more options to configure the conversion task. In addition, it lets you create your own conversion configuration class, which you may use for additional control or to work around conversion issues.

3. Use coremltools, Apple's conversion package. This is the lowest-level approach and therefore provides maximum control. It can still fail for some models (especially new ones), but you always have the option to dive inside the source code and try to figure out why.

In my experience, though, the easiest way is to download the already converted Llama 2 CoreML model from Hugging Face, as described below:

4.1 Install huggingface_hub

In the terminal, run the command below to install huggingface_hub:

pip install huggingface_hub

4.2 Download the Llama 2 CoreML Model

Once huggingface_hub is installed, you can use huggingface-cli to download the model:

huggingface-cli download --local-dir-use-symlinks False --local-dir ~/Download/Llama-2-7b-chat-coreml coreml-projects/Llama-2-7b-chat-coreml

You will be asked for a Hugging Face token. If you do not have one, follow the link in the output to generate a token, then paste it into the terminal. You can also change where the model is saved by altering the value after the --local-dir parameter.
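If you would rather script the download than use the CLI, the same repository can be fetched from Python with `snapshot_download` from huggingface_hub. The sketch below is one possible way to do it, not the method used in this post: the helper names `resolve_local_dir` and `download_model` are made up for illustration, and the default path simply mirrors the CLI command above.

```python
from pathlib import Path
from typing import Optional

# Repository id taken from the CLI command in this post
REPO_ID = "coreml-projects/Llama-2-7b-chat-coreml"


def resolve_local_dir(local_dir: str) -> Path:
    """Expand a leading '~' so the target path does not depend on the shell."""
    return Path(local_dir).expanduser()


def download_model(
    local_dir: str = "~/Download/Llama-2-7b-chat-coreml",
    token: Optional[str] = None,
) -> str:
    """Download the whole model repository and return the local folder path.

    `token` is a Hugging Face access token; when it is None, huggingface_hub
    falls back to a token saved by a previous login, if there is one.
    """
    # Imported here so the helper above stays usable without the package installed
    from huggingface_hub import snapshot_download

    target = resolve_local_dir(local_dir)
    return snapshot_download(repo_id=REPO_ID, local_dir=target, token=token)


if __name__ == "__main__":
    print(download_model())
```

The model is several gigabytes, so the download can take a while either way.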

5 Run the App

If the preceding steps succeeded, the app's user interface should now be visible. Click the button in the left sidebar to load the model, and you can start using your own app.
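If the model does not load, it can help to confirm that the download folder actually contains a Core ML model. The sketch below searches a folder for Core ML bundles. It assumes the model is stored as a `.mlpackage` or compiled `.mlmodelc` bundle, which are the usual Core ML formats but are not confirmed by this post for this particular repository; `find_coreml_models` is a name made up for illustration.

```python
from pathlib import Path

# Assumed bundle formats: .mlpackage (model package) and .mlmodelc (compiled model)
COREML_SUFFIXES = {".mlpackage", ".mlmodelc"}


def find_coreml_models(root: str) -> list:
    """Return a sorted list of Core ML bundles found anywhere under `root`."""
    base = Path(root).expanduser()
    if not base.is_dir():
        # Missing folder: nothing was downloaded to this location
        return []
    return sorted(p for p in base.rglob("*") if p.suffix in COREML_SUFFIXES)


if __name__ == "__main__":
    # Default path from the download command in section 4.2
    for model in find_coreml_models("~/Download/Llama-2-7b-chat-coreml"):
        print(model)
```

An empty result suggests the download did not finish or was saved to a different folder.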

This post fills in details not covered in the original post, to make the process simpler. Much credit goes to the Hugging Face team for their remarkable work on this.

References

- Post regarding the release of Swift Transformers

- Llama 2 CoreML model made by Hugging Face (coreml-projects/Llama-2-7b-chat-coreml)